Most senior engineers fail staff+ interviews despite doing precisely the work these roles require. As a senior engineer, you've driven consensus across hostile teams, made architectural calls with incomplete data, and turned production disasters into systematic improvements.

But when an interviewer asks you to explain that process in three minutes, the whole thing falls apart.

The problem isn't your leadership experience. It's that you've never had to articulate your judgment framework under pressure. You know how to navigate ambiguous requirements and mediate technical conflicts in real work.

But explaining that reasoning clearly while someone evaluates your every word is an entirely different skill.

This guide breaks down the most common technical leadership interview questions at staff+ levels, what interviewers evaluate, and how to structure answers that demonstrate organizational impact.

1. How do you drive technical alignment across multiple teams?

Interviewers ask this to evaluate your ability to influence engineers you don't manage, reconcile competing priorities, and shepherd a single technical vision through organizational politics. Staff+ roles are defined by cross-team influence, which means leading without authority.

The real test is whether you understand stakeholder incentives and can convert that insight into consensus.

Recognizing question variants that test the same skill

Interviewers probe the same core skill with different framing:

- Tell me about convincing multiple teams to adopt your technical approach: Tests whether you can build consensus through technical credibility rather than authority.

- Describe aligning backend and mobile timelines when both had conflicting priorities: Evaluates your ability to broker an agreement when teams have legitimate but competing needs.

- How do you handle disagreement with another team's technical direction? Probes whether you can influence technical decisions beyond your direct control.

All of these test the same fundamental skill.

What separates weak from strong answers

Most engineers answer this incorrectly by describing how they led their own team's stand-ups or code reviews. That misses the point. Interviewers want stories spanning several teams that show influence, not hierarchy.

The stakes matter. Did misalignment risk duplicate work, an inconsistent user experience, or wasted engineering quarters?

Building a narrative that demonstrates influence

Strong answers name the rival priorities up front, detail how you earned credibility through data or prototypes, and show the final organization-level impact. Maybe you built a proof-of-concept that made the trade-offs concrete, or you facilitated working sessions where teams discovered shared constraints. The narrative arc moves from fragmentation to alignment through your specific actions.

Red flags include wielding positional authority ("I told them my decision was final") or skipping stakeholder perspectives.

Structure your story clearly: lay out the situation and conflicting goals, define your task, detail the actions you took to broker an agreement, and finish with quantifiable results — reduced duplicate work, unified SLAs, or accelerated release velocity.

2. Walk me through a major architectural decision and the trade-offs you considered

Interviewers ask this because they want evidence that you anchor design choices to business impact, not technical elegance. Staff+ interviews test trade-off thinking relentlessly: cost versus speed, risk versus innovation, team capability versus ideal solution, immediate needs versus long-term maintainability.

How different framings reveal the same judgment test

Several variants probe whether you can defend decisions pragmatically:

- Tell me about choosing a technically inferior solution for business reasons: Tests whether you prioritize shipping value over architectural purity.

- How do you balance technical debt against feature velocity? Evaluates your judgment about when to slow down and when to accumulate intentional debt.

- Describe an architecture that needed rework and what you would change: Probes your ability to learn from mistakes and own imperfect decisions.

Engineers fail by narrating only their solution without articulating rejected alternatives. That's a major red flag signaling shallow thinking. Interviewers assume you picked the first viable option without exploring trade-offs.

How to demonstrate pragmatic leadership

Frame your answer with a clear structure:

- Set the situation and business goal first — cut cloud spend by 30%, support 10x traffic growth, enable the mobile team to ship independently.

- Describe at least two alternatives you seriously considered, citing numbers: cost projections, latency targets, and migration timeline estimates.

- Share the result with metrics — shipped three weeks earlier, reduced operational costs by 25%, and enabled a new revenue stream.

- Own imperfections honestly — "We underestimated migration complexity" or "I'd choose serverless today given team growth" signals pragmatic leadership.

Blaming constraints or dismissing simpler approaches raises serious doubts about judgment. The goal is to demonstrate thoughtful decision-making under real constraints, not perfect architecture in a vacuum. Staff+ engineers ship solutions that work within organizational reality.

3. Tell me about a technical decision you made with incomplete information

Staff+ roles require making consequential decisions before you have complete information. Interviewers probe whether you can expose unknowns, assess risk intelligently, and still commit at the right moment — not paralysis, not recklessness.

They're testing structured thinking under uncertainty.

Common variants that probe structured thinking

Here are other common variants:

- Requirements kept changing throughout the project. How did you make progress? Evaluates whether you can create structure from chaos.

- Two designs looked equally viable. How did you choose? Tests your decision-making framework when data doesn't clearly favor one option.

- Describe navigating a project with constantly shifting priorities: Probes your ability to maintain forward momentum despite external uncertainty.

Candidates stumble when they claim they "always had complete information" or when they lock onto the first idea without exploring alternatives. Both reveal an inability to handle staff-level ambiguity.

Creating clarity through systematic problem decomposition

A strong response shows your framework for creating clarity. Here's how:

- Identify the critical unknowns, rank them by potential impact, run lightweight validation spikes, and time-box how long you'll wait for more data.

- Walk through specific techniques — competitive analysis, user research spikes, architectural prototypes, or reversible decisions that let you pivot cheaply.

- Frame this with a clear narrative structure that shows how you balanced risk and velocity, communicated contingency plans, and revisited assumptions as new signals arrived.
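The ranking and time-boxing steps above can be sketched in a few lines. This is a minimal illustration, not a prescribed method: the `Unknown` class, the 1 to 5 impact scale, and the sample questions are all hypothetical, chosen only to show how irreversible, high-impact unknowns rise to the top of the list.

```python
from dataclasses import dataclass


@dataclass
class Unknown:
    question: str       # what we don't know yet
    impact: int         # hypothetical scale: 1 (minor) to 5 (could sink the project)
    reversible: bool    # can a wrong guess be undone cheaply?
    timebox_days: int   # how long we are willing to investigate


def prioritize(unknowns):
    """Order unknowns by impact (highest first), irreversible before reversible."""
    return sorted(unknowns, key=lambda u: (-u.impact, u.reversible))


# Hypothetical examples, for illustration only.
unknowns = [
    Unknown("Will the vendor API hold at peak load?", 5, False, 3),
    Unknown("Which serialization format is fastest?", 2, True, 1),
    Unknown("Can the mobile team adopt the new schema?", 5, True, 2),
]

for u in prioritize(unknowns):
    if u.reversible:
        action = "decide now, revisit as signals arrive"
    else:
        action = f"run a validation spike, time-boxed to {u.timebox_days} days"
    print(f"[impact {u.impact}] {u.question} -> {action}")
```

The point of the sketch is the ordering rule: reversible decisions get made quickly, while the time-boxed investigation budget goes to the unknowns that are both high-impact and hard to undo.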

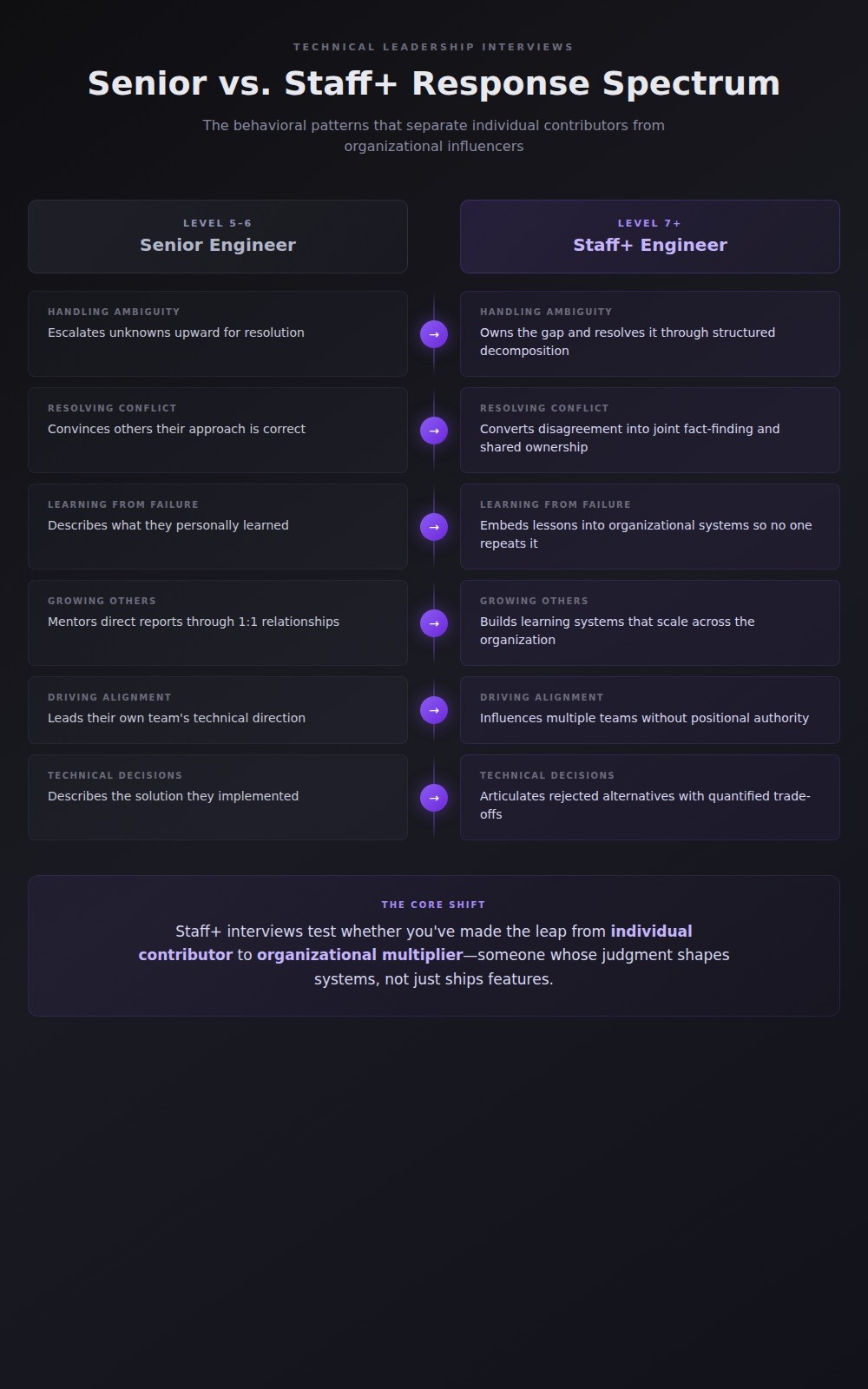

The contrast matters: a senior engineer escalates ambiguity upward; a Staff or Principal engineer owns the gap and resolves it through structured problem decomposition.

Demonstrate comfort with ambiguity without suggesting you're guessing randomly or stalling indefinitely. The skill being tested is moving forward thoughtfully when perfect information isn't available, which describes most consequential technical decisions at scale.

4. How do you handle two senior engineers who fundamentally disagree on a technical approach?

Conflict mediation appears in nearly every staff+ interview because it's core to the role. Interviewers want proof you can keep delivery moving when two high-status peers dig in their heels. They're measuring influence without authority and your ability to facilitate rather than dictate.

Creating productive disagreement through psychological safety

Strong answers begin by explaining how you created psychological safety for productive disagreement. This means setting ground rules, clarifying decision scope, and ensuring each engineer feels genuinely heard.

Weak answers skip straight to "I convinced them I was right" — a red flag suggesting fragile collaboration skills.

Next, surface the technical criteria everyone already agrees on:

- Performance targets

- Latency budgets

- Maintenance costs

- Team skill distribution

Then, turn the debate into joint fact-finding:

- What data would change minds?

- Can you prototype both approaches quickly?

- Could you A/B test in production to measure user impact?

The resolution should come from data and shared discovery, not personal opinions or positional authority. Show how you converted ego into shared ownership — a subtle leadership leap separating individual contributors from organizational influencers.

Red flags that expose conflict avoidance

Key red flags interviewers watch for:

- "I overruled them because I had more experience": Signals an inability to facilitate discussions between peers.

- "I just escalated to our director to make the call": Shows that you avoid conflict rather than resolving it.

- "I convinced both engineers my approach was superior": Reveals that you dictate solutions instead of building consensus.

Close your story with measurable outcomes: the team picked a solution, delivery stayed on track, and both engineers later co-authored the post-mortem or implementation guide. That progression demonstrates diplomacy, sound technical judgment, and the ability to turn conflict into forward momentum.

5. How do you mentor engineers across the organization, not just your direct team?

Interviewers ask this to distinguish between helpful individuals and engineers who multiply impact through others at scale. Staff+ roles depend on your ability to build learning systems across the organization, not just casual 1:1 relationships.

They're testing whether you systematically develop talent.

Testing for systems that multiply impact at scale

Here are similar variants:

- How do you share expertise with teams you don't manage? Probes whether you create learning systems that scale beyond your direct time.

- Describe a program you built to level up engineers organization-wide: Tests your ability to design repeatable frameworks for knowledge transfer.

- Tell me about helping someone grow beyond your immediate scope: Evaluates whether your influence extends across organizational boundaries.

Candidates stumble when they describe isolated coffee chats or occasional pairing sessions. That shows kindness but not organizational impact. Strong responses follow a clear structure: start with the situation that created the need ("new microservice owners struggled with on-call quality" or "junior engineers lacked system design exposure"), then detail systematic solutions.

Maybe you launched cross-team design reviews with documented frameworks that others could use to facilitate them. Perhaps you built internal guides capturing hard-won lessons from production incidents. Or you paired senior engineers with newcomers across different teams through a structured rotation program.

Measuring success through organizational change

Deliver results with concrete numbers. Here are example metrics:

- 40% faster incident resolution after knowledge-sharing program launch

- 60% of participating engineers were promoted within 18 months

- Internal documentation cited in 200+ pull requests across teams

Staff+ engineers are judged on frameworks that scale beyond individual relationships.

Focus on systems that spread expertise faster than you personally could: regular office hours, searchable documentation, recorded tech talks, lightweight playbooks. Show how you measured success and refined your approach—proof that you're building organizations that teach themselves, not just solving individual problems repeatedly.

6. How do you balance short-term delivery pressure against long-term technical health?

Interviewers probe whether you can think beyond the next sprint while keeping the codebase maintainable for years to come. Staff and Principal engineers aren't just individual contributors. They're the stewards of engineering portfolios whose future maintenance will cost real money and developer morale.

They're testing judgment under competing incentives:

- Can you recognize when strategic refactoring must pause due to an imminent customer launch?

- Do you know when to push back and reserve time for platform work to prevent cascading failures later?

The question probes for thinking that ties technical debt decisions directly to business impact.

Common variants

Common variants of this question include:

- Tell me about shipping fast and regretting it later: Tests whether you learn from rushing and can articulate the true cost.

- How did you justify rewriting a stable service? Evaluates your ability to make the business case for technical investments.

- Describe handling technical debt while maintaining feature velocity: Probes your judgment about when to pause and invest versus when to ship.

Candidates fail by presenting false binary choices — either over-engineered architecture or reckless shipping.

Moving beyond false binary choices

Strong stories describe phased approaches: document concrete risks with cost estimates, discuss them transparently with product partners, then schedule incremental remediation alongside feature work.

Quantifying future savings demonstrates thoughtful opportunity cost analysis. For example: "Cutting build times 40% after our planned Bazel migration would unblock parallel feature development worth two engineer-months quarterly." Numbers make abstract technical debt concrete for non-technical stakeholders.
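A rough back-of-the-envelope calculation shows how a figure like this might be derived. The inputs below (team size, builds per day, build duration, 60 working days per quarter, 160 hours per engineer-month) are hypothetical assumptions, not numbers from any real migration, and the model deliberately ignores that engineers are rarely fully idle during a build.

```python
def quarterly_hours_saved(engineers, builds_per_day, build_minutes,
                          reduction, working_days=60):
    """Engineer-hours recovered per quarter if every build gets `reduction` faster.

    `reduction` is a fraction, e.g. 0.40 for a 40% cut in build time.
    """
    minutes_saved_per_build = build_minutes * reduction
    total_minutes = (engineers * builds_per_day
                     * minutes_saved_per_build * working_days)
    return total_minutes / 60


# Hypothetical inputs: 20 engineers, 5 builds each per day, 10-minute builds.
hours = quarterly_hours_saved(engineers=20, builds_per_day=5,
                              build_minutes=10, reduction=0.40)
engineer_months = hours / 160  # assumes roughly 160 working hours per month

print(f"{hours:.0f} engineer-hours per quarter")
print(f"about {engineer_months:.1f} engineer-months per quarter")
```

In an interview, stating the assumptions behind the estimate matters as much as the result, because it shows you know where the number could be wrong.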

Red flags are phrases like:

- "We built it perfectly upfront."

- "We'll fix it later when we have time."

Both signal failure to balance urgency with sustainability. Demonstrate that you champion pragmatic guardrails (feature toggles that enable safe rollbacks, sunset criteria for technical experiments, and regular debt audit rituals), so delivery stays swift without mortgaging the future.

7. Tell me about your biggest technical failure and what you learned

Interviewers use this to evaluate whether you own mistakes or deflect blame to circumstances, constraints, or others. They're listening for accountability, curiosity about root causes, and the ability to translate painful incidents into better engineering practice.

Three dimensions interviewers evaluate

They assess three dimensions:

- Failure depth: A production outage costing revenue reveals more than a typo in test scripts.

- Personal contribution: What decisions or assumptions led you there, not what your team or product management did.

- Learning loop: How you changed processes, tooling, or culture afterward so the organization benefited from your mistake.

Variants probe the same underlying questions:

- Describe a project that went completely off the rails: Tests your honesty about scope and your role in the failure.

- How do you handle situations where your technical judgment was wrong? Evaluates whether you own mistakes or deflect responsibility.

- Tell me about rolling back a major technical decision: Probes your ability to recognize when to cut losses and change course.

Most engineers stumble by picking trivial examples that reveal nothing, or by describing major disasters while blaming everyone except themselves: "legacy code," "unrealistic deadlines," "product kept changing requirements." Both patterns expose exactly what interviewers watch for.

Embedding lessons into organizational systems

Strong responses spotlight consequential failures and quantify impact honestly. Walk through your post-mortem actions, showing how you introduced systematic safeguards: automated rollbacks triggered by error rate spikes, observability improvements that catch similar issues earlier, and design review requirements for specific risk categories.
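To make "automated rollbacks triggered by error rate spikes" concrete, here is a minimal sketch of the decision logic. The `RollbackTrigger` class, its thresholds, and the window size are assumptions for illustration; a real deployment system would wire a signal like this into its own metrics pipeline and rollback mechanism.

```python
from collections import deque


class RollbackTrigger:
    """Signals a rollback when the error rate over a sliding window is too high."""

    def __init__(self, window_size=100, max_error_rate=0.05, min_samples=20):
        self.results = deque(maxlen=window_size)  # True means the request failed
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples

    def record(self, failed: bool) -> bool:
        """Record one request outcome; return True if a rollback should fire."""
        self.results.append(failed)
        if len(self.results) < self.min_samples:
            return False  # too little data to judge the new release
        error_rate = sum(self.results) / len(self.results)
        return error_rate > self.max_error_rate


# Simulated traffic: healthy requests followed by a burst of failures.
trigger = RollbackTrigger(window_size=50, max_error_rate=0.10, min_samples=10)
outcomes = [False] * 30 + [True] * 10

for i, failed in enumerate(outcomes):
    if trigger.record(failed):
        print(f"rollback triggered at request {i}")
        break
```

The `min_samples` guard is the kind of detail worth mentioning in a post-mortem story: without it, a single failed request right after deploy would roll back a healthy release.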

Senior engineers describe what they personally learned. Staff+ engineers show how they embedded that lesson into organizational systems so no one repeats it.

Frame the story with a clear structure, maintain a candid tone about your misjudgments, and let your systematic improvements prove growth. The goal is to demonstrate resilience and strategic thinking, not to claim you never make mistakes.

Contribute to AGI development at DataAnnotation

The systems thinking that helps you ace technical leadership interviews is the same thinking that shapes frontier AI models. At DataAnnotation, we operate one of the world's largest AI training marketplaces, connecting exceptional thinkers with the critical work of teaching models to reason rather than memorize.

If your background includes technical expertise, domain knowledge, or the critical thinking to evaluate complex trade-offs, AI training at DataAnnotation positions you at the frontier of AGI development.

Our coding projects start at $40+ per hour, with compensation reflecting the judgment required. Your evaluation judgments on code quality, algorithmic elegance, and edge case handling directly influence whether training runs advance model reasoning or optimize for the wrong objectives.

Over 100,000 remote workers have contributed to this infrastructure.

If you want in, getting from interested to earning takes five straightforward steps:

- Visit the DataAnnotation application page and click "Apply"

- Fill out the brief form with your background and availability

- Complete the Starter Assessment, which tests your critical thinking and coding skills

- Check your inbox for the approval decision (typically within a few days)

- Log in to your dashboard, choose your first project, and start earning

No signup fees. DataAnnotation stays selective to maintain quality standards. You can only take the Starter Assessment once, so read the instructions carefully and review before submitting.

Apply to DataAnnotation if you understand why quality beats volume in advancing frontier AI — and you have the expertise to contribute.
