Imagine pasting a 500-page PDF into a model and asking for a summary. It works perfectly. You try the same thing with another model and get an error message: "This document exceeds the maximum context length." You're confused.

Both are supposedly state-of-the-art AI models. Why does one handle your document while the other refuses?

The answer lies in context windows, but the real story goes deeper than the numbers you see in marketing materials.

A model with a 200,000-token context window isn't automatically better than one with a 32,000-token context window. Sometimes it's worse. Sometimes the model technically accepts your document but quietly forgets half of it. Sometimes, more context creates more confusion.

If you've ever wondered why an AI conversation suddenly goes off the rails after exchanges, why a model contradicts something it said earlier, or why summarizing a long document produces vaguer results than summarizing a short one, context windows explain all of it. Not just the token limits, but what actually happens inside those limits as context grows.

The real story isn't about how much text a model can technically ingest. It's about what happens to model behavior as that context grows, why certain lengths cause catastrophic failures, and what this means for the work we do training these systems.

What is a context window?

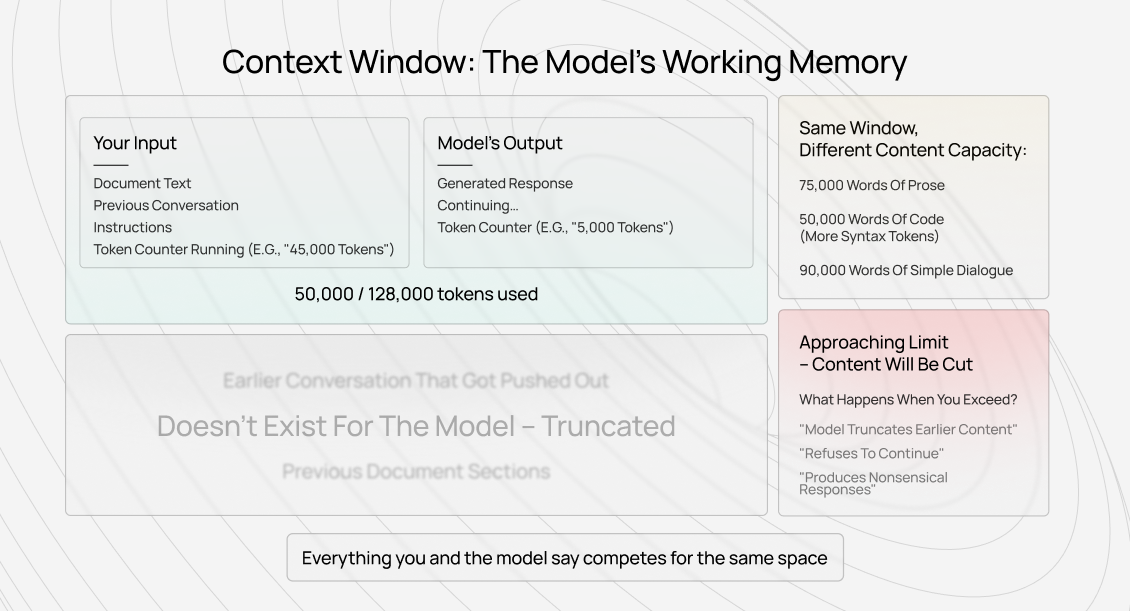

A context window is the maximum amount of text or information an LLM can process and reference in a single interaction, both the input you provide and the output it generates. Think of it as the model's working memory.

Everything outside that window simply doesn't exist for the model, no matter how relevant it might be to your task.

When you paste a document into LLMs like ChatGPT or Claude, you're loading that content into the model's context window. When the model generates a response, that response also occupies space in the same window.

The moment your combined input and output exceed the model's limit, something has to give. Either the model truncates earlier content, refuses to continue, or starts producing nonsensical responses.

Tokens as the building blocks of context

Context windows are measured in tokens, not words or characters. A token is roughly four characters in English text, but the actual count varies. "Running" might be one token, while "antidisestablishmentarianism" could be four. Code uses tokens differently from prose. Special characters often consume tokens inefficiently.

This matters because the same context window allows you to fit different amounts of actual content depending on what you're working with. A 100,000-token window might hold 75,000 words of a novel, 50,000 words of Python code with syntax tokens, or 90,000 words of simple dialogue.

When we design annotation tasks involving long documents, we're constantly calculating whether the content will fit, not just the word count, but the token density of what researchers need to be evaluated.

Why context windows determine task complexity

The practical impact shows up daily in our work. A researcher once wanted us to evaluate how well a model follows instructions across a 20-page technical specification. With a 4,000-token context window, we'd need to split it into chunks, which would break the thread of consistency.

With a 100,000-token window, we can load the entire spec and test whether the model remembers the voltage requirements from page 2 when answering questions about page 18.

Longer context windows don't just mean more text. They enable qualitatively different tasks. You can evaluate cross-document reasoning, test long-term consistency, verify that code changes don't break functions defined 30,000 tokens earlier, or check whether a legal argument contradicts a precedent cited at the conversation start.

Context window sizes of prominent LLMs

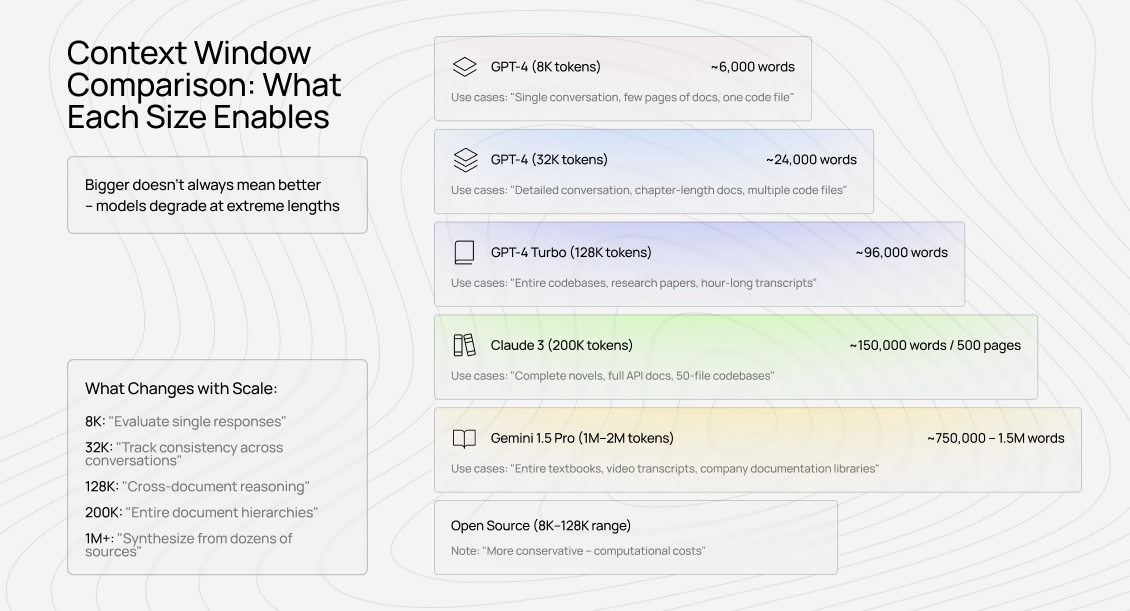

The numbers vary wildly across models, and those differences determine what you can actually build or evaluate.

Let’s see how different models compare on context length.

GPT-4 and GPT-4 Turbo

GPT-4 launched with two variants: an 8,000-token model for most conversations and a 32,000-token model for longer documents. GPT-4 Turbo expanded this to 128,000 tokens, which is a 16x increase that fundamentally changed what developers could build.

At 8,000 tokens, you're working with roughly 6,000 words of input/output. Enough for a detailed conversation, a few pages of documentation, or a single code file. At 128,000 tokens, you can fit entire codebases, full research papers, or transcripts of hour-long meetings.

The annotation tasks shifted from "evaluate this response" to "evaluate consistency across this entire document hierarchy."

Claude 3 family

Claude 3 models were launched with 200,000-token context windows, roughly 150,000 words or 500 pages of text.

This became the new baseline for serious long-context work. I've personally reviewed Claude annotations involving entire novels, complete API documentation sets, and multi-file codebases where the model needed to track dependencies across 50 different files.

What's interesting from a training perspective is that longer context doesn't just mean more content — it means more opportunities for the model to fail in subtle ways. A model might handle 50,000 tokens perfectly, then start hallucinating at 120,000 tokens.

Testing this requires AI trainers who can spot when a model contradicts information from 80,000 tokens ago.

Gemini models

Gemini 1.5 Pro pushed context windows to 1 million tokens in experimental releases, later stabilizing around 2 million tokens in production. At that scale, you're talking about entire textbooks, complete video transcripts with timestamps, or comprehensive code repositories.

We've worked on some Gemini projects where the task was literally "load this company's entire documentation library and see if the model can find contradictions between documents from different years."

The work at that scale requires different skills — not just evaluating whether a response is good, but whether it correctly synthesized information from 50 disparate sources loaded simultaneously.

Open source leaders

Llama 3 variants typically operate in the 8,000-32,000 token range, while models like Mistral and Command R+ have pushed toward 128,000 tokens. The open-source world moves more conservatively on context windows because computational costs scale brutally with length.

From an annotation standpoint, open source models often require more careful context management. Where Claude might gracefully handle 150,000 tokens, an open source model might start degrading at 20,000.

This means annotation tasks need more precise scoping, as we can't just throw a full document at the model and expect consistent behavior across the entire range.

Why do models have a maximum context window length?

The limits aren't arbitrary. They stem from fundamental architectural constraints that determine both what's computationally possible and what produces valuable results. Understanding why these limits exist explains why longer context windows don't automatically improve models, and why annotation work becomes more complex as windows expand.

Computational constraints scale quadratically

Transformer models use self-attention mechanisms where every token attends to every other token in the context. This means computational costs scale quadratically with context length. Doubling the context window quadruples the computation required.

I saw this viscerally when working with researchers testing a model at 500,000 tokens. The inference time went from seconds to minutes. For annotation work, this matters because tasks that seem simple, such as "evaluate this model's response," suddenly take 20 times longer to process.

When we're designing training data at scale, computational costs determine what's feasible. A task that works at 8,000 tokens might be prohibitively expensive at 128,000 tokens.

Memory requirements grow exponentially

Context doesn't just require computation; it also involves memory. The model needs to store attention patterns, intermediate activations, and key-value caches for every token in the context. As windows expand, memory requirements balloon.

This creates a practical ceiling on the hardware that can support it. Even with the most advanced GPUs, there's a hard limit to how much context you can fit in memory. Training models on long contexts is even more constrained — the memory requirements during training can be 10-100x higher than during inference.

For those of us creating training data, this translates into specific constraints imposed by researchers. They'll ask: "Can you design this task to fit in 100,000 tokens instead of 150,000?" Not because the larger context wouldn't be better, but because their hardware literally cannot process it at the scale they need for training.

Quality degradation at extreme lengths

Here's what the research papers don't emphasize enough: models get worse as context grows, even within their stated limits. We call this the "lost in the middle" problem: models perform well on information at the start and end of their context window but struggle with information buried in the middle.

I've reviewed hundreds of annotations where the model perfectly recalled a fact from the first 1,000 tokens, correctly used information from the last 10,000 tokens, but completely missed a crucial detail at token 50,000.

The attention mechanism doesn't distribute evenly across the entire context. Instead, it concentrates on recent tokens and occasionally reaches back to the beginning.

This creates annotation challenges that pure context window numbers miss.

A model might technically support 200,000 tokens, but its effective context (where it reliably attends to information) might be only 100,000. Our AI trainers need to test not just whether information is in the context window, but whether the model actually uses it.

How context window limitations impact AI training work

The technical constraints directly inform how we design and evaluate the training data. Context windows don't just determine what's possible for end users — they shape every annotation task, evaluation rubric, and quality metric we use to train models.

Task scope expands with context window growth

Five years ago, most annotation tasks fit comfortably in 2,000-4,000 tokens. You'd evaluate a single response to a single question. The task was discrete, contained, and rarely required tracking information across multiple interactions.

Now?

Standard annotation tasks regularly involve 50,000-100,000 token contexts.

We're asking AI trainers to evaluate whether a model maintained consistency across a 30-page specification, whether code suggestions account for functions defined in different files, and whether legal reasoning stays aligned with precedents cited 80,000 tokens earlier.

This shift occurred because longer context windows enabled new capabilities that labs wanted to test and train on. Once Claude could handle 200,000 tokens, researchers wanted training data that pushed those limits. Once Gemini reached 1 million tokens, labs needed AI trainers who could evaluate model behavior at that scale.

The work fundamentally changed. It's not just longer; it's qualitatively different. Annotators need to hold complex document structures in memory, track cross-references across dozens of pages, and spot consistency failures that span massive contexts.

The skill requirements jumped alongside the token counts.

What this means for task complexity and pay tiers

Context window growth directly drove the expansion of specialized tiers in AI training work. When tasks fit in 4,000 tokens, generalist AI trainers could handle most work. When tasks expanded to 100,000+ tokens across technical domains, labs needed experts who could actually parse that much context and evaluate it correctly.

We see this in compensation structures. Basic AI training tasks at DataAnnotation (short contexts, clear rubrics, straightforward evaluation) start at $20/hour because the cognitive load remains manageable.

But long-context expert tasks requiring domain expertise? Those justify starting at $40-50/hour because they're fundamentally more demanding.

The complexity manifests in subtle ways.

Evaluating a 500-token code snippet requires understanding that specific function. Evaluating a 50,000-token codebase requires understanding the entire architecture, tracking dependencies across files, and recognizing when changes in one section break assumptions elsewhere.

The annotation time doesn't scale linearly; reviewing 100x more tokens might require 200x more cognitive effort because the number of interconnections multiplies.

The skills that matter when working with long context

Long-context annotation work rewards specific capabilities that shorter tasks don't emphasize

- You need a strong working memory to hold the document structure across dozens of pages.

- You need systematic attention to detail to catch consistency failures that 50,000 tokens might separate.

- You need domain expertise to evaluate whether technical information 80,000 tokens deep is being used correctly.

Pattern recognition becomes crucial. Good long-context AI trainers develop intuition for where models typically fail. They know to check the middle sections carefully because of the "lost in the middle" problem.

They verify that models actually used specific context rather than generating plausible-sounding responses from training data. They spot when attention patterns degrade by noticing changes in response quality as context grows.

These aren't skills you can teach quickly. They develop through experience with hundreds of long-context tasks. This creates interesting career implications — as context windows continue expanding (and they will), the gap widens between AI trainers who can handle this complexity and those who can't.

The work gets more specialized, not less. The expertise becomes more valuable, not commoditized.

Contribute to AGI development at DataAnnotation

The real frontier isn't how many tokens we can stuff into a context window. It's whether models can maintain consistent, high-quality behavior across whatever context they're given. And that question: does the model actually use its context well? This is what makes long-context AI training work both challenging and valuable.

If your background includes technical expertise, domain knowledge, or the critical thinking to evaluate complex trade-offs, AI training at DataAnnotation positions you at the frontier of AGI development.

Over 100,000 remote workers have contributed to this infrastructure.

If you want in, getting from interested to earning takes five straightforward steps:

- Visit the DataAnnotation application page and click "Apply"

- Fill out the brief form with your background and availability

- Complete the Starter Assessment, which tests your critical thinking skills

- Check your inbox for the approval decision (typically within a few days)

- Log in to your dashboard, choose your first project, and start earning

No signup fees. We stay selective to maintain quality standards. You can only take the Starter Assessment once, so read the instructions carefully and review before submitting.

Apply to DataAnnotation if you understand why quality beats volume in advancing frontier AI — and you have the expertise to contribute.